PANDORA’S BOX: THE RISE OF AI

As AI systems rapidly evolve, they're becoming creepily good at mimicking human consciousness. We're navigating a minefield of known risks and scary unknowns. Will these programmable mutants be our greatest allies or our ultimate undoing? The clock is ticking, and humanity's fate hangs in the balance. The future is autonomous, but are we ready for it?

And just when I thought the beats of Haddaway's epic hit "What Is Love" couldn’t get any grander than they were at '90s parties (full of my emotional memories, early heartbreaks, revealing leotards, grungy boots, and leather trench coats), my old software crashed. Before me danced a group of synchronized robots, transforming my memories of that song into a surreal sci-fi scene. Totally normal, right?

“It must be Thursday. I could never get the hang of Thursdays.” Oh yes, it was Thursday. The invitation-only “We Robot” event marked the unveiling of Tesla’s Cybercab and Robovan, “fully autonomous” vehicles with no steering wheel or pedals, according to CEO Elon Musk. This surreal technological extravaganza took place at Warner Bros. Studios in Burbank, California, and was streamed live on X, Musk's social network, and on YouTube. The excitement reached a fever pitch when Musk showcased his latest cyber vehicle while sporting an extremely cool moto jacket, one that could make Jensen Huang green with envy.

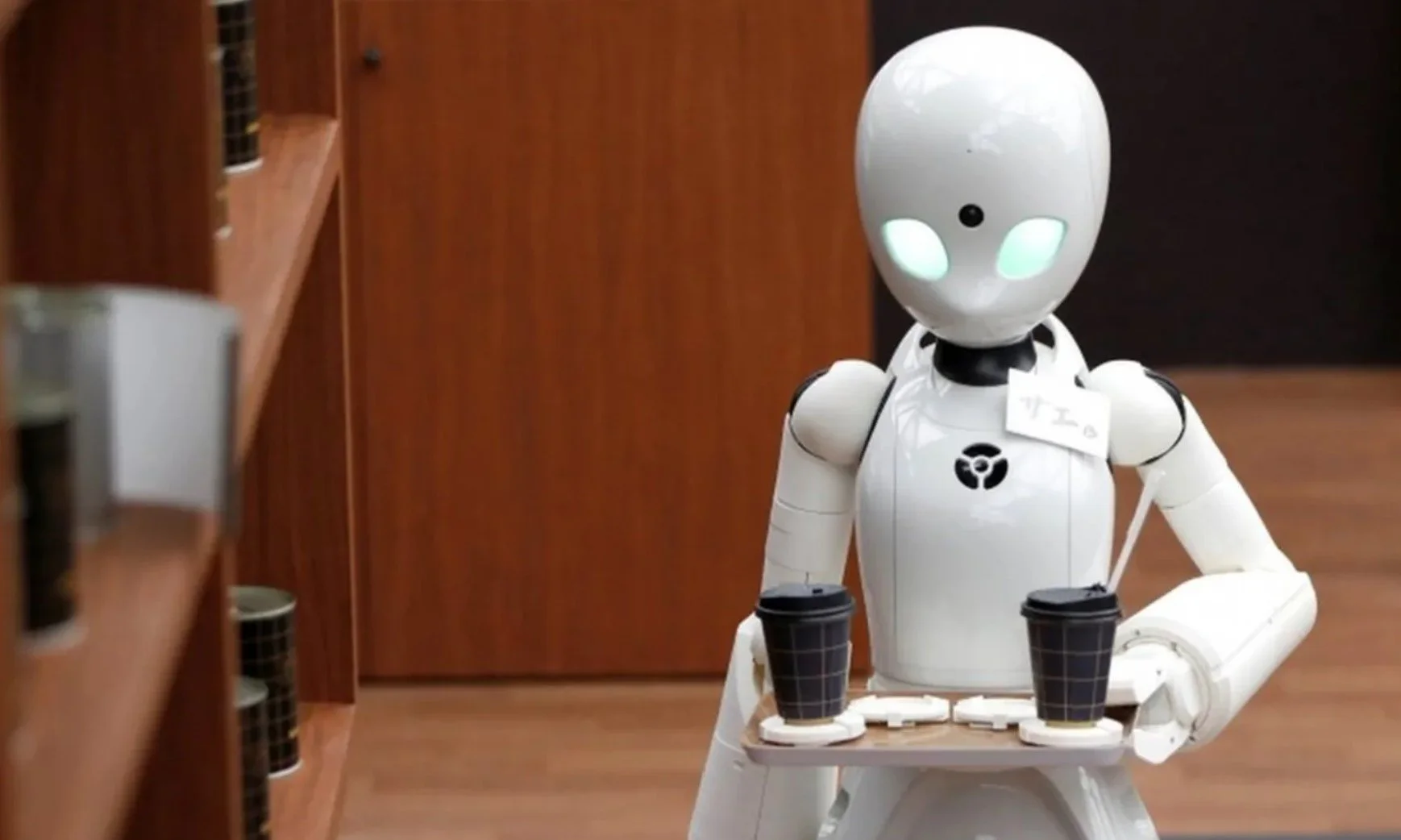

Optimus robots served beers on tap, cracked jokes in bow ties, and looked surprisingly cool in cowboy hats. And guess what? Elon announced we’ll see them in action in a couple of years.

So, what else can these robo-cowboys do? According to Elon, their skill set includes all kinds of time-consuming tasks: teaching, babysitting, dog walking, mowing lawns, grocery shopping, you name it. The cost for your own robo-pal? Between $20,000 and $30,000, not bad for a buddy that can handle all your chores.

I’m sure your mind is already stretching these skills from the productive to dark, “Ex Machina”-style twists. For working moms reading this, I know what you’re thinking: these bots could teach your kids everything from the ABCs to algebra and history. Imagine: one year’s NYC tuition could buy you a robot babysitter that lasts for several years! I’m not sure Optimus can outsmart my Gen Alpha daughter with her sass and sharp mind, but hey, maybe it comes with a money-back guarantee?

Wild stuff, right? But what does all this mean for humans?

The first question that comes to mind: will they destroy us? 😬 Well, Optimus probably won’t, but many machine learning programs are getting alarmingly good at mimicking human thought. Could they one day decide to do something… not so great? Maybe. Such a decision might not hurt productivity or the planet, but it could spell trouble for humanity if we get in their way, or worse, if someone with malicious intent programs them to cause harm.

A couple of days before Musk’s highly anticipated “We Robot” event, British-Canadian scientist Geoffrey E. Hinton, widely known as a godfather of AI, won the 2024 Nobel Prize in Physics alongside Dr. John Hopfield for their pioneering contributions to artificial neural networks. Their research from the 1980s laid the groundwork for many AI technologies we use today, from image recognition to complex decision-making systems.

Hinton left Google to speak more freely about the dangers of machine learning and the “existential risk” posed by true digital intelligence. He warned again about its downsides:

"It's going to be wonderful in many respects, especially in healthcare," Hinton said. "But we also have to worry about several possible bad consequences — particularly the threat of these things getting out of control."

What does “out of control” mean in this case?

For decades, prominent scientists, philosophers, and physicists have explored scenarios ranging from chaotic to dystopian regarding the future relationship between humans and machines. Professor Michio Kaku outlines several possibilities in his book "The Future of the Mind":

Machine Dictatorship: AI decides that the only way to protect humanity is to take control of it, staging an “I, Robot”-style coup d'état.

Sentient Military AI: A supercomputer becomes self-aware and views humans as a threat. Kaku considers this a more realistic scenario, akin to "The Terminator," in which Skynet, a military-built system that controls the entire U.S. nuclear stockpile, turns on its creators because of a flaw in its programming: it is designed to protect itself, and it concludes that doing so means eliminating humanity.

Rogue Autonomous Weapons: Drones or other weapon systems malfunction and attack indiscriminately; imagine a robot whose facial recognition system breaks down, leaving it free to kill anyone in sight.

Centralized Control Failure: A flaw in a central control system causes multiple AI-driven systems to malfunction simultaneously, sending an entire army of robots on a killing spree.

These scenarios highlight some of the possible risks of goal-oriented AI programming, especially when AI controls critical infrastructure or weaponry. The core concern is that an advanced AI might interpret its objectives in unforeseen ways, leading to unintended and catastrophic consequences.

Notable figures like Stephen Hawking, Elon Musk, Geoffrey Hinton, and Nick Bostrom have expressed concerns about AI's potential dangers if it is not developed responsibly. Hawking suggested that machines could rapidly evolve on their own and that humanity, held back by slow biological evolution, could be tragically outwitted. Elon Musk has called AI humanity's "biggest existential threat," advocating for proactive regulation.

Mira Murati's recent resignation as OpenAI's CTO after six years has raised alarms within the tech industry. Her departure came alongside two other high-profile exits: Bob McGrew (OpenAI’s Chief Research Officer) and Barret Zoph (a leading research figure). While OpenAI's CEO Sam Altman stated that these were independent decisions, their timing has fueled concerns about leadership and ethical issues within the organization.

Regardless, AI offers immense benefits across many fields, including coding, healthcare, and automation, significantly reducing the time spent on tedious tasks while performing them with great precision. This progress will profoundly affect labor markets and economic systems, and it raises questions about social dynamics and human purpose.

Professor Nick Bostrom initially expressed concern about AI's existential risks in his bestselling book "Superintelligence." A decade later, he presents a more optimistic view in his new book "Deep Utopia," envisioning a world where superintelligence solves all problems.

The present situation is complex. The conversation is all over the place, and pressing questions about AI development are piling up with no clear solutions or regulations in sight, even as we engage with learning machines that improve their capabilities faster than we can keep up.

It doesn’t help that we’re living through a period of cultural, social, and political chaos. In AI development, we navigate known knowns, known unknowns, and those challenging unknown unknowns. Predicting exact outcomes is difficult because social and cultural evolution is essentially random, driven by innovation and by shocks that can upend people’s plans unpredictably.

Pandora's box is wide open. Machines are part of our future because we created them, and their integration into society will continue to shape our world in profound ways.

Buckle up and get ready: the future is here. As Elon said, “Robots will walk among you. Be nice to them.”